
NativQA: Multilingual Culturally-Aligned Natural Query for LLMs

Natural Question Answering (QA) datasets play a crucial role in evaluating the capabilities of large language models (LLMs) and in ensuring their effectiveness in real-world applications. Although numerous QA datasets have been developed, few are region-specific datasets created by native speakers in their own languages. This gap hinders effective benchmarking of LLMs on regional and cultural specificities and limits the development of models fine-tuned for these settings.

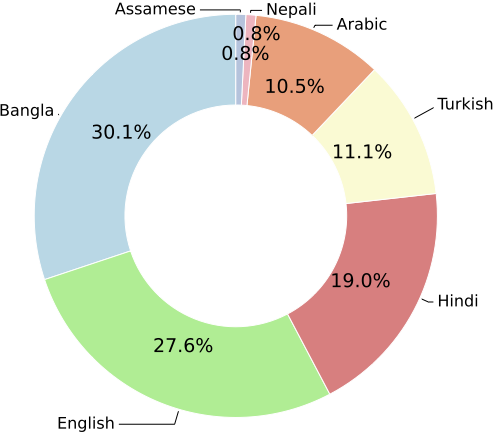

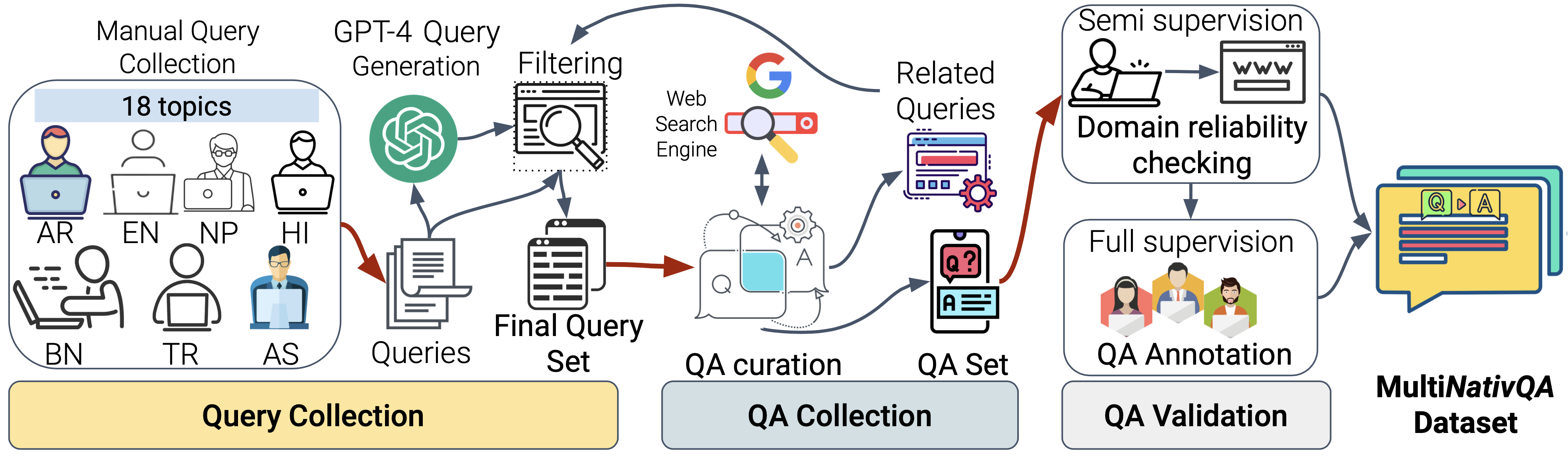

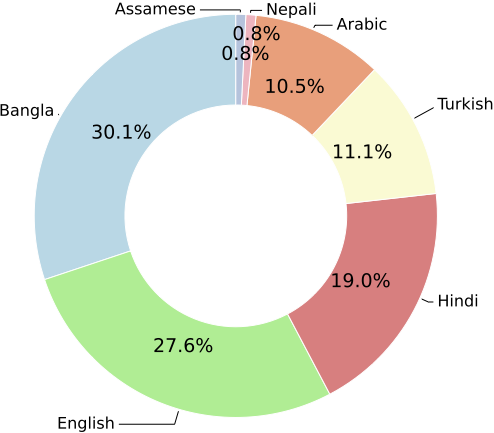

In this study, we propose NativQA, a scalable, language-independent framework for constructing culturally and regionally aligned QA datasets in native languages for LLM evaluation and tuning. We demonstrate the efficacy of the framework by constructing a multilingual natural QA dataset, MultiNativQA, consisting of approximately 64k manually annotated QA pairs in seven languages, ranging from high- to extremely low-resource. The dataset is built from queries written by native speakers in nine regions and covers 18 topics.

We benchmark both open- and closed-source LLMs using the MultiNativQA dataset. Additionally, we showcase the framework’s efficacy in constructing fine-tuning data, especially for low-resource and dialectally rich languages. Both the NativQA framework and the MultiNativQA dataset have been made publicly available to the community.

MultiNativQA Dataset

Statistics

Topics Coverage

| Selected topics used as seeds to collect manual queries |
|---|
| Animal, Business, Cloth, Education, Events, Food & Drinks, General, Geography, Immigration Related, Language, Literature, Names & Persons, Plants, Religion, Sports & Games, Tradition, Travel, Weather |

Language Coverage

Arabic, Assamese, Bangla, English, Hindi, Nepali, Turkish
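Because the topics above serve as seeds for collecting manual queries, one way to picture the collection space is to cross the topic list with the language list. The sketch below is illustrative only: the helper `seed_slots` and the constant names are hypothetical and not part of the NativQA release, and the nine regions are not modeled because this page does not list them.

```python
from itertools import product

# Topics and languages exactly as listed on this page.
TOPICS = [
    "Animal", "Business", "Cloth", "Education", "Events", "Food & Drinks",
    "General", "Geography", "Immigration Related", "Language", "Literature",
    "Names & Persons", "Plants", "Religion", "Sports & Games", "Tradition",
    "Travel", "Weather",
]
LANGUAGES = ["Arabic", "Assamese", "Bangla", "English", "Hindi", "Nepali", "Turkish"]


def seed_slots(languages, topics):
    """Hypothetical helper: return one (language, topic) pair per combination.

    Each pair stands for a slot in which native speakers could be asked to
    write queries on that topic in that language. Regions are not modeled.
    """
    return list(product(languages, topics))


if __name__ == "__main__":
    slots = seed_slots(LANGUAGES, TOPICS)
    print(len(TOPICS), len(LANGUAGES), len(slots))  # 18 7 126
    print(slots[0])  # ('Arabic', 'Animal')
```

The grid only enumerates where queries could come from; the actual queries and the QA pairs were produced and annotated manually, which this sketch does not attempt to reproduce.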

News

| Date | Announcement |
|---|---|
| Nov 13, 2025 | Fostering Native and Cultural Inclusivity in LLMs |
| Jan 23, 2025 | Multilingual and Multimodal Cultural Inclusivity in LLMs |
| Nov 13, 2024 | Fostering Native and Cultural Inclusivity in LLMs |

Latest Posts

| Date | Post |
|---|---|
| Jul 16, 2024 | Arabic Language Technologies – Medium |